Get Started, Part 6: Deploy your app

Estimated reading time: 13 minutes

Prerequisites

- Install Docker version 1.13 or higher.

- Get Docker Compose as described in Part 3 prerequisites.

- Get Docker Machine as described in Part 4 prerequisites.

- Read the orientation in Part 1.

- Learn how to create containers in Part 2.

- Make sure you have published the `friendlyhello` image you created by pushing it to a registry. We’ll be using that shared image here.

- Be sure your image works as a deployed container. Run this command, slotting in your info for `username`, `repo`, and `tag`: `docker run -p 80:80 username/repo:tag`, then visit `http://localhost/`.

- Have the final version of `docker-compose.yml` from Part 5 handy.
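The version requirement in the first prerequisite can be checked from a terminal. The following is a minimal sketch, not part of the original tutorial: the helper name `version_ge` is hypothetical, and it assumes a `sort` that supports `-V` (GNU coreutils and recent BSD/macOS versions do).

```shell
#!/bin/sh
# Sketch: check that the local Docker client meets the 1.13 minimum.

# version_ge A B: succeeds when version A >= version B.
# Assumes `sort -V` (version sort) is available.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Only query the client when docker is actually installed.
if command -v docker >/dev/null 2>&1; then
  installed="$(docker version --format '{{.Client.Version}}' 2>/dev/null || true)"
  if version_ge "${installed:-0}" "1.13"; then
    echo "Docker ${installed} meets the 1.13 minimum"
  else
    echo "Docker ${installed:-unknown} is older than 1.13"
  fi
fi
```

If Docker is not installed, the script prints nothing; install it first and run the check again.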

Introduction

You’ve been editing the same Compose file for this entire tutorial. Well, we have good news. That Compose file works just as well in production as it does on your machine. Here, we’ll go through some options for running your Dockerized application.

Choose an option

If you’re okay with using Docker Community Edition in production, you can use Docker Cloud to help manage your app on popular service providers such as Amazon Web Services, DigitalOcean, and Microsoft Azure.

To set up and deploy:

- Connect Docker Cloud with your preferred provider, granting Docker Cloud permission to automatically provision and “Dockerize” VMs for you.

- Use Docker Cloud to create your computing resources and create your swarm.

- Deploy your app.

Note: We will be linking into the Docker Cloud documentation here; be sure to come back to this page after completing each step.

Connect Docker Cloud

You can run Docker Cloud in standard mode or in Swarm mode.

If you are running Docker Cloud in standard mode, follow instructions below to link your service provider to Docker Cloud.

- Amazon Web Services setup guide

- DigitalOcean setup guide

- Microsoft Azure setup guide

- Packet setup guide

- SoftLayer setup guide

- Use the Docker Cloud Agent to bring your own host

If you are running in Swarm mode (recommended for Amazon Web Services or Microsoft Azure), then skip to the next section on how to create your swarm.

Create your swarm

Ready to create a swarm?

- If you’re on Amazon Web Services (AWS) you can automatically create a swarm on AWS.

- If you are on Microsoft Azure, you can automatically create a swarm on Azure.

- Otherwise, create your nodes in the Docker Cloud UI, and run the `docker swarm init` and `docker swarm join` commands you learned in part 4 over SSH via Docker Cloud. Finally, enable Swarm Mode by clicking the toggle at the top of the screen, and register the swarm you just created.
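For the manual path, the sequence of swarm commands looks roughly like the sketch below. The helper `join_command` and the `<worker-token>` and `<manager-ip>` placeholders are hypothetical; substitute the values from your own nodes. Port 2377 is the default swarm management port.

```shell
#!/bin/sh
# Sketch of the manual path: initialize a manager, then join each worker.

# join_command TOKEN MANAGER_IP: build the command a worker runs to join.
join_command() {
  printf 'docker swarm join --token %s %s:2377\n' "$1" "$2"
}

# On the node that will be the manager (run over SSH):
#   docker swarm init --advertise-addr <manager-ip>
#   docker swarm join-token -q worker     # prints only the worker token
#
# On each worker node, run the command printed here:
join_command "<worker-token>" "<manager-ip>"
```

The script only prints the join command, so you can review it before pasting it into each worker's SSH session.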

Note: If you are Using the Docker Cloud Agent to Bring your Own Host, this provider does not support swarm mode. You can register your own existing swarms with Docker Cloud.

Deploy your app on a cloud provider

-

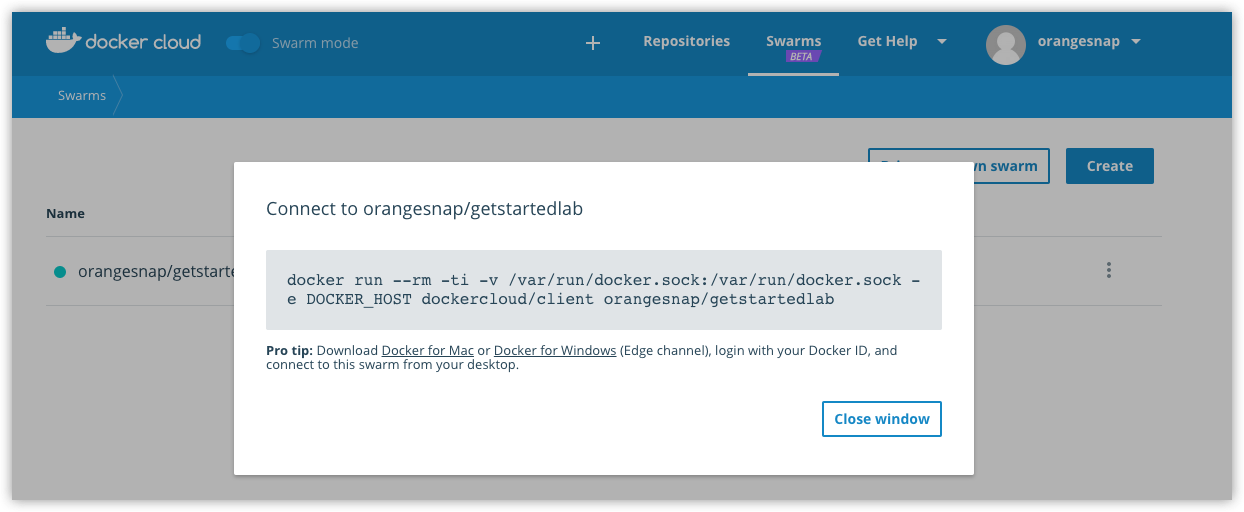

Connect to your swarm via Docker Cloud. There are a couple of different ways to connect:

- From the Docker Cloud web interface in Swarm mode, select Swarms at the top of the page, click the swarm you want to connect to, and copy-paste the given command into a command line terminal.

Or …

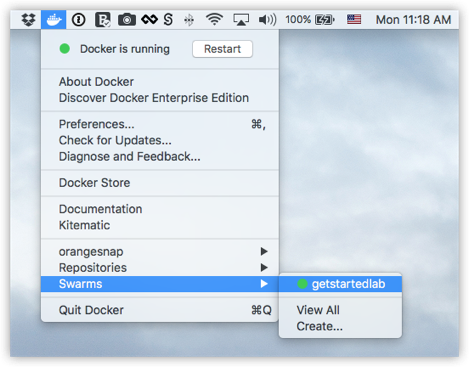

- On Docker for Mac or Docker for Windows, you can connect to your swarms directly through the desktop app menus.

Either way, this opens a terminal whose context is your local machine, but whose Docker commands are routed up to the swarm running on your cloud service provider. You directly access both your local file system and your remote swarm, enabling pure

dockercommands. -

Run

docker stack deploy -c docker-compose.yml getstartedlabto deploy the app on the cloud hosted swarm.docker stack deploy -c docker-compose.yml getstartedlab Creating network getstartedlab_webnet Creating service getstartedlab_web Creating service getstartedlab_visualizer Creating service getstartedlab_redisYour app is now running on your cloud provider.

Run some swarm commands to verify the deployment

You can use the swarm command line, as you’ve done already, to browse and manage the swarm. Here are some examples that should look familiar by now:

- Use `docker node ls` to list the nodes.

      [getstartedlab] ~ $ docker node ls
      ID                            HOSTNAME                                      STATUS  AVAILABILITY  MANAGER STATUS
      9442yi1zie2l34lj01frj3lsn     ip-172-31-5-208.us-west-1.compute.internal    Ready   Active
      jr02vg153pfx6jr0j66624e8a     ip-172-31-6-237.us-west-1.compute.internal    Ready   Active
      thpgwmoz3qefdvfzp7d9wzfvi     ip-172-31-18-121.us-west-1.compute.internal   Ready   Active
      n2bsny0r2b8fey6013kwnom3m *   ip-172-31-20-217.us-west-1.compute.internal   Ready   Active        Leader

- Use `docker service ls` to list services.

      [getstartedlab] ~/sandbox/getstart $ docker service ls
      ID            NAME                      MODE        REPLICAS  IMAGE                            PORTS
      x3jyx6uukog9  dockercloud-server-proxy  global      1/1       dockercloud/server-proxy         *:2376->2376/tcp
      ioipby1vcxzm  getstartedlab_redis       replicated  0/1       redis:latest                     *:6379->6379/tcp
      u5cxv7ppv5o0  getstartedlab_visualizer  replicated  0/1       dockersamples/visualizer:stable  *:8080->8080/tcp
      vy7n2piyqrtr  getstartedlab_web         replicated  5/5       sam/getstarted:part6             *:80->80/tcp

- Use `docker service ps <service>` to view tasks for a service.

      [getstartedlab] ~/sandbox/getstart $ docker service ps vy7n2piyqrtr
      ID            NAME                 IMAGE                 NODE                                         DESIRED STATE  CURRENT STATE           ERROR  PORTS
      qrcd4a9lvjel  getstartedlab_web.1  sam/getstarted:part6  ip-172-31-5-208.us-west-1.compute.internal   Running        Running 20 seconds ago
      sknya8t4m51u  getstartedlab_web.2  sam/getstarted:part6  ip-172-31-6-237.us-west-1.compute.internal   Running        Running 17 seconds ago
      ia730lfnrslg  getstartedlab_web.3  sam/getstarted:part6  ip-172-31-20-217.us-west-1.compute.internal  Running        Running 21 seconds ago
      1edaa97h9u4k  getstartedlab_web.4  sam/getstarted:part6  ip-172-31-18-121.us-west-1.compute.internal  Running        Running 21 seconds ago
      uh64ez6ahuew  getstartedlab_web.5  sam/getstarted:part6  ip-172-31-18-121.us-west-1.compute.internal  Running        Running 22 seconds ago
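The REPLICAS column is the quickest signal that something did not start. As a rough sketch (the helper name `not_converged` is hypothetical), the following filters `docker service ls` down to services whose running task count differs from the desired count. It relies on the `--format` option of `docker service ls` with the `.Name` and `.Replicas` placeholders.

```shell
#!/bin/sh
# Sketch: list services whose running replicas differ from the desired count.

# not_converged: read "NAME RUNNING/DESIRED" lines on stdin and print the
# names of services where RUNNING != DESIRED. Extra fields are ignored.
not_converged() {
  while read -r name replicas _; do
    running="${replicas%%/*}"
    desired="${replicas##*/}"
    [ "$running" = "$desired" ] || echo "$name"
  done
}

# Only query the swarm when docker is installed; errors are suppressed so the
# script stays quiet when the terminal is not connected to a swarm manager.
if command -v docker >/dev/null 2>&1; then
  docker service ls --format '{{.Name}} {{.Replicas}}' 2>/dev/null | not_converged
fi
```

Fed the `docker service ls` output shown above, this would print `getstartedlab_redis` and `getstartedlab_visualizer`, the two services at 0/1.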

Open ports to services on cloud provider machines

At this point, your app is deployed as a swarm on your cloud provider servers, as evidenced by the `docker` commands you just ran. But, you still need to open ports on your cloud servers in order to:

- allow communication between the `redis` service and `web` service on the worker nodes

- allow inbound traffic to the `web` service on the worker nodes so that Hello World and Visualizer are accessible from a web browser.

- allow inbound SSH traffic on the server that is running the `manager` (this may be already set on your cloud provider)

These are the ports you need to expose for each service:

| Service | Type | Protocol | Port |
|---|---|---|---|
| `web` | HTTP | TCP | 80 |
| `visualizer` | HTTP | TCP | 8080 |
| `redis` | TCP | TCP | 6379 |

Methods for doing this will vary depending on your cloud provider.

We’ll use Amazon Web Services (AWS) as an example.
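On AWS, the same ports can also be opened from the command line instead of the console. This is a hedged sketch, not the tutorial's own method: it assumes the AWS CLI is installed and configured, `sg-EXAMPLE` is a hypothetical placeholder for your swarm's node security group ID, and the `0.0.0.0/0` source range is an assumption you may want to narrow, particularly for `redis`.

```shell
#!/bin/sh
# Sketch: print AWS CLI calls that open the three service ports.

# Hypothetical placeholder; substitute your node security group ID.
GROUP_ID="${GROUP_ID:-sg-EXAMPLE}"

# Assumed source range (anywhere); restrict this where you can.
CIDR="${CIDR:-0.0.0.0/0}"

# Ports from the table above: web, visualizer, redis.
PORTS="80 8080 6379"

# ingress_commands GROUP: emit one authorize-security-group-ingress call per port.
ingress_commands() {
  for port in $PORTS; do
    echo "aws ec2 authorize-security-group-ingress --group-id $1 --protocol tcp --port $port --cidr $CIDR"
  done
}

# Dry run: print the commands so they can be reviewed before running them.
ingress_commands "$GROUP_ID"
```

The script only prints the commands. After checking the group ID and source range, you could pipe the output to `sh` to apply the rules.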

What about the redis service to persist data?

To get the `redis` service working, you need to `ssh` into the cloud server where the `manager` is running, and make a `data/` directory in `/home/docker/` before you run `docker stack deploy`. Another option is to change the data path in `docker-compose.yml` to a pre-existing path on the `manager` server. This example does not include this step, so the `redis` service is not up in the example output.
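A minimal sketch of the first option follows. `MANAGER_HOST` and `SSH_USER` are hypothetical variables: the user name `docker` is an assumption, and the sketch also assumes SSH authentication to the manager is already set up (for example, a key loaded in your SSH agent).

```shell
#!/bin/sh
# Sketch: create the redis data directory on the manager before deploying.

# Hypothetical values: your manager's public DNS name and SSH user.
MANAGER_HOST="${MANAGER_HOST:-}"
SSH_USER="${SSH_USER:-docker}"

# Path from the text above: a data/ directory under /home/docker/.
REMOTE_CMD='mkdir -p /home/docker/data'

if [ -n "$MANAGER_HOST" ]; then
  # -p makes the command safe to repeat if the directory already exists.
  ssh "${SSH_USER}@${MANAGER_HOST}" "$REMOTE_CMD"
else
  echo "Set MANAGER_HOST, then run: ssh ${SSH_USER}@<manager-host> '$REMOTE_CMD'"
fi
```

Run this before `docker stack deploy` so the directory exists when the `redis` task starts.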

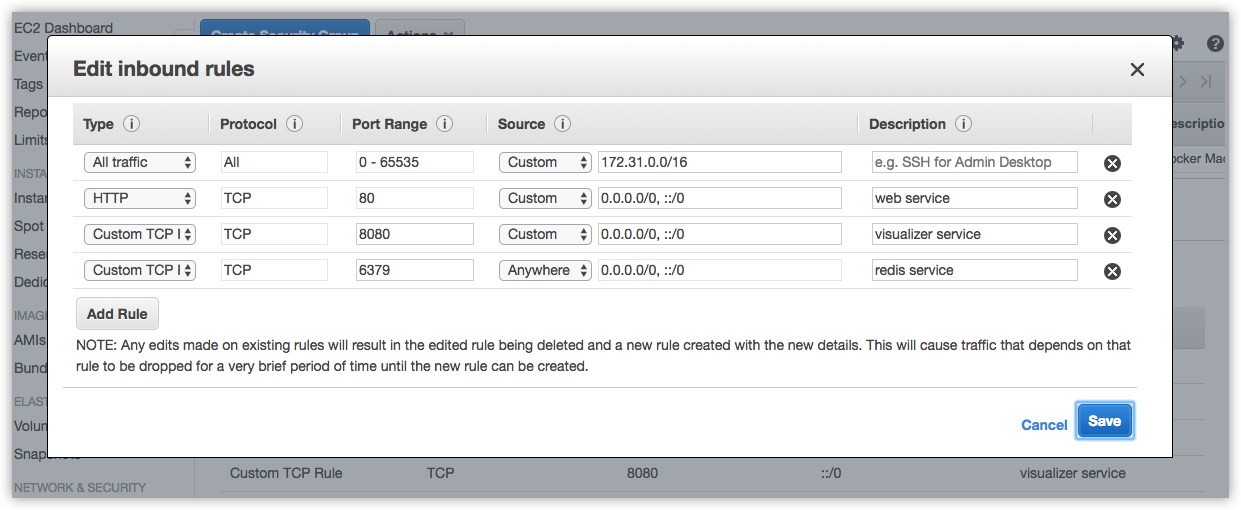

Example: AWS

-

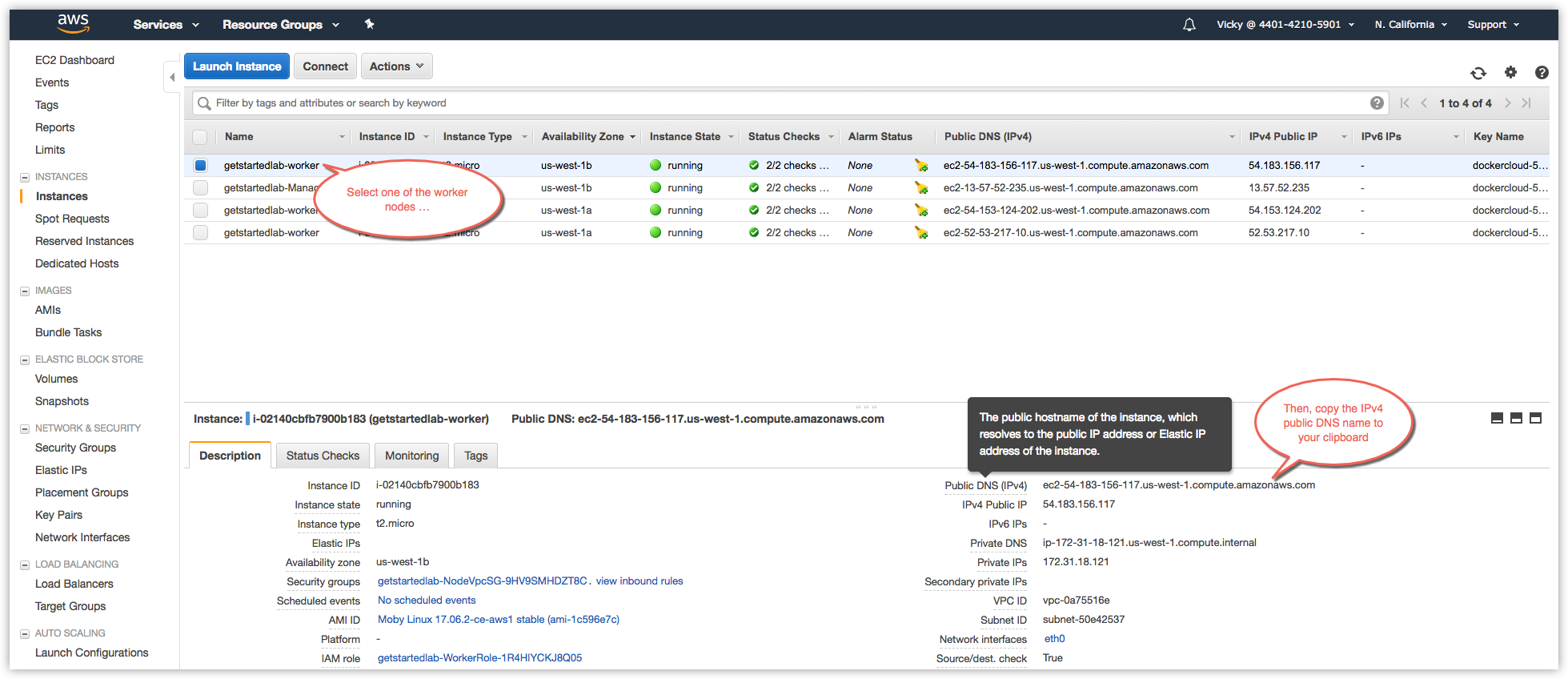

Log in to the AWS Console, go to the EC2 Dashboard, and click into your Running Instances to view the nodes.

-

On the left menu, go to Network & Security > Security Groups.

You’ll see security groups related to your swarm for

getstartedlab-Manager-<xxx>,getstartedlab-Nodes-<xxx>, andgetstartedlab-SwarmWide-<xxx>. -

Select the “Node” security group for the swarm. The group name will be something like this:

getstartedlab-NodeVpcSG-9HV9SMHDZT8C. -

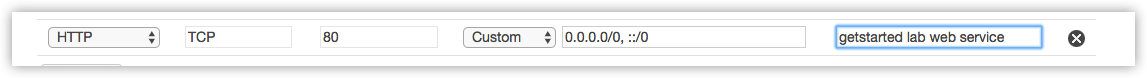

Add Inbound rules for the

web,visualizer, andredisservices, setting the Type, Protocol and Port for each as shown in the table above, and click Save to apply the rules.

Tip: When you save the new rules, HTTP and TCP ports will be auto-created for both IPv4 and IPv6 style addresses.

-

Go to the list of Running Instances, get the public DNS name for one of the workers, and paste it into the address bar of your web browser.

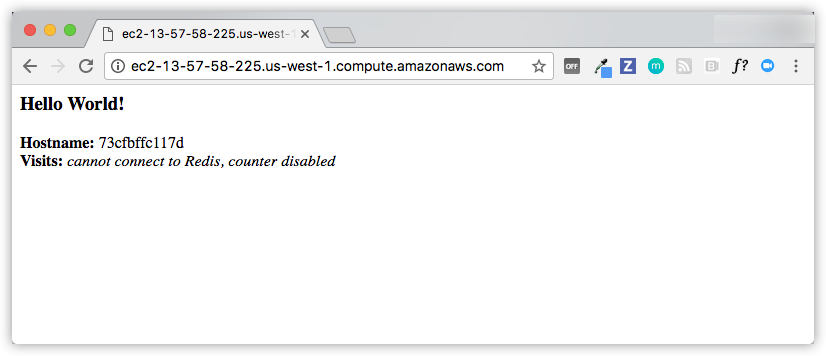

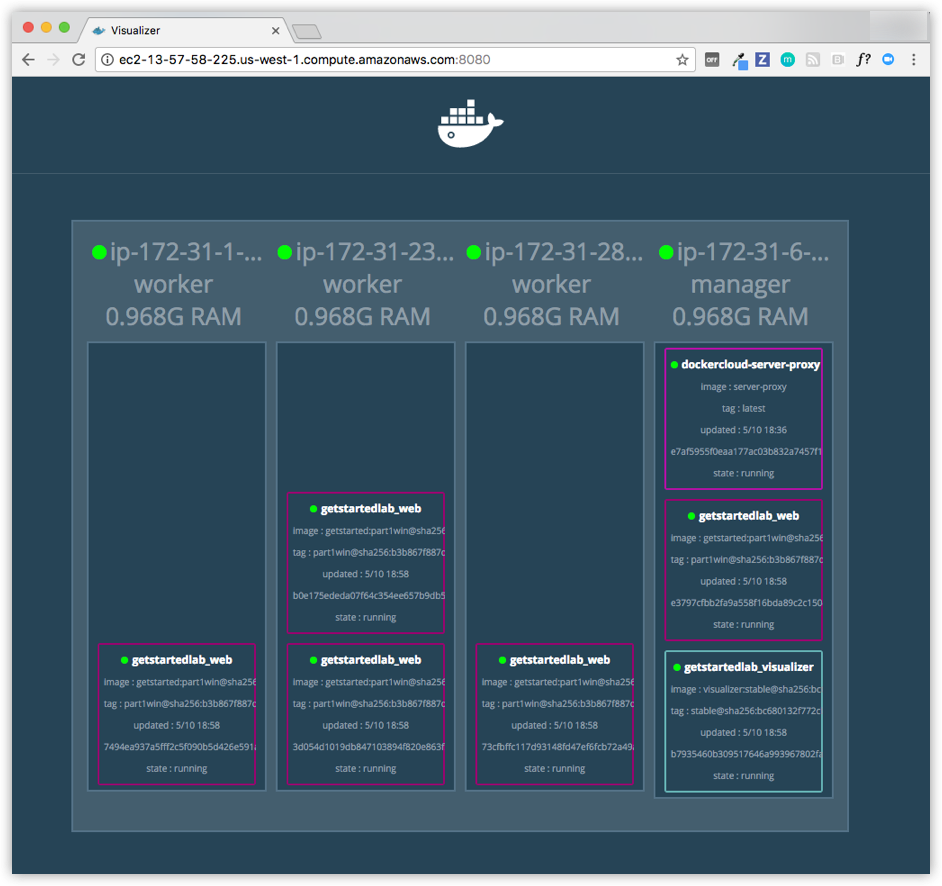

Just as in the previous parts of the tutorial, the Hello World app displays on port

80, and the Visualizer displays on port8080.
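The browser check in the last step can also be done from a terminal. The sketch below is an illustration only: `WORKER_DNS` is a hypothetical variable holding the public DNS name of one worker, `service_urls` is a hypothetical helper, and `curl` is assumed to be installed.

```shell
#!/bin/sh
# Sketch: probe the web app (port 80) and the Visualizer (port 8080).

# Hypothetical placeholder: the public DNS name of one worker.
WORKER_DNS="${WORKER_DNS:-}"

# service_urls HOST: print the two URLs the tutorial expects to respond.
service_urls() {
  echo "http://$1:80/"
  echo "http://$1:8080/"
}

if [ -n "$WORKER_DNS" ] && command -v curl >/dev/null 2>&1; then
  for url in $(service_urls "$WORKER_DNS"); do
    # -m 10 caps each request at 10 seconds; curl reports 000 when the
    # host cannot be reached (for example, if the port is still closed).
    code="$(curl -s -o /dev/null -m 10 -w '%{http_code}' "$url" || true)"
    echo "$url -> HTTP $code"
  done
fi
```

A status of `000` usually points back to the inbound rules: the request never reached the service.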

Iteration and cleanup

From here you can do everything you learned about in previous parts of the tutorial.

- Scale the app by changing the `docker-compose.yml` file and redeploy on-the-fly with the `docker stack deploy` command.

- Change the app behavior by editing code, then rebuild, and push the new image. (To do this, follow the same steps you took earlier to build the app and publish the image).

- You can tear down the stack with `docker stack rm`. For example:

      docker stack rm getstartedlab

Unlike the scenario where you were running the swarm on local Docker machine VMs, your swarm and any apps deployed on it will continue to run on cloud servers regardless of whether you shut down your local host.

Customers of Docker Enterprise Edition run a stable, commercially-supported version of Docker Engine, and as an add-on they get our first-class management software, Docker Datacenter. You can manage every aspect of your application via UI using Universal Control Plane, run a private image registry with Docker Trusted Registry, integrate with your LDAP provider, sign production images with Docker Content Trust, and many other features.

Take a tour of Docker Enterprise Edition

The bad news is: the only cloud providers with official Docker Enterprise editions are Amazon Web Services and Microsoft Azure.

The good news is: there are one-click templates to quickly deploy Docker Enterprise on each of these providers:

Note: Having trouble with these? View our setup guide for AWS. You can also view the WIP guide for Microsoft Azure.

Once you’re all set up and Datacenter is running, you can deploy your Compose file from directly within the UI.

After that, you’ll see it running, and can change any aspect of the application you choose, or even edit the Compose file itself.


Bringing your own server to Docker Enterprise and setting up Docker Datacenter essentially involves two steps:

- Get Docker Enterprise Edition for your server’s OS from Docker Store.

- Follow the instructions to install Datacenter on your own host.

Note: Running Windows containers? View our Windows Server setup guide.

Once you’re all set up and Datacenter is running, you can deploy your Compose file from directly within the UI.

After that, you’ll see it running, and can change any aspect of the application you choose, or even edit the Compose file itself.

Congratulations!

You’ve taken a full-stack, dev-to-deploy tour of the entire Docker platform.

There is much more to the Docker platform than what was covered here, but you have a good idea of the basics of containers, images, services, swarms, stacks, scaling, load-balancing, volumes, and placement constraints.

Want to go deeper? Here are some resources we recommend:

- Samples: Our samples include multiple examples of popular software running in containers, and some good labs that teach best practices.

- User Guide: The user guide has several examples that explain networking and storage in greater depth than was covered here.

- Admin Guide: Covers how to manage a Dockerized production environment.

- Training: Official Docker courses that offer in-person instruction and virtual classroom environments.

- Blog: Covers what’s going on with Docker lately.